Key Impact

- 17,000 clients served through AI chat

- 3,199 managed Q&A pairs in knowledge base

- 400+ customer conversations handled

- Observability layer shapes support strategy

The Problem

The client, a self-directed IRA provider, serves 17,000 customers navigating complex retirement account rules. Their support team was overwhelmed with repetitive questions:

- "Can I invest my IRA in real estate?"

- "What are the contribution limits for 2024?"

- "How do I roll over my 401(k)?"

The Solution

I built a comprehensive AI chat system with two components: a customer-facing chat widget and an admin platform for knowledge management.

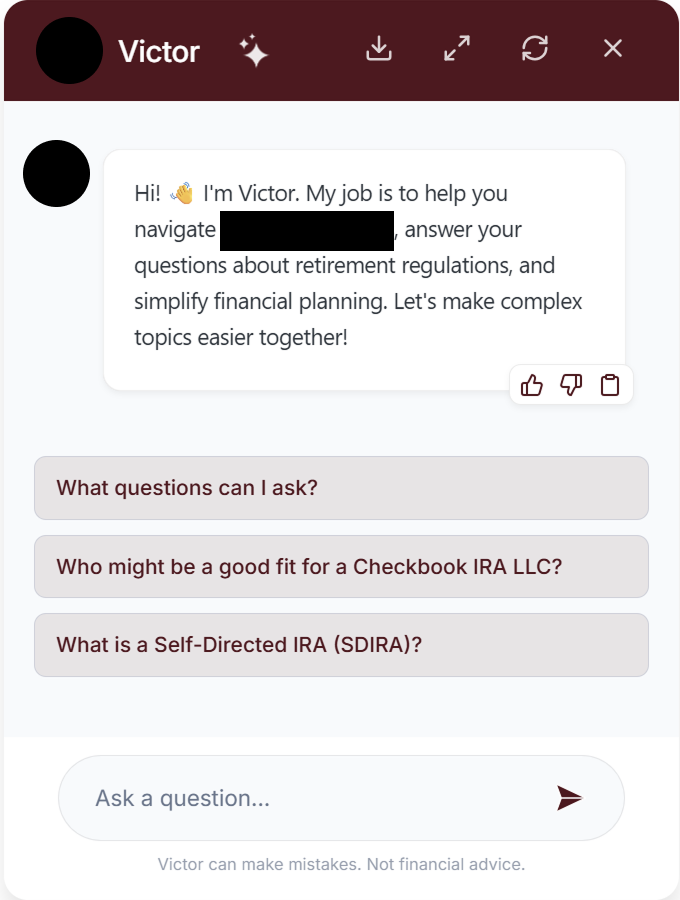

Customer Chat Widget

The chat widget is embedded on the client's website, where customers interact with it directly. It uses RAG (Retrieval-Augmented Generation) to provide accurate, sourced answers.

How It Works:

1. Customer asks a question in natural language

2. System retrieves relevant knowledge base entries using semantic search

3. LLM generates a response grounded in retrieved content

4. Response includes citations so customers can verify
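The four steps above can be sketched in a few lines of Python. This is a minimal illustration, not the production code: the `Entry` shape, the placeholder answers, the example URLs, and the bag-of-words `embed` function are all assumptions standing in for the real knowledge base and embedding model, and the final LLM call is left out.

```python
import math
import re
from collections import Counter
from dataclasses import dataclass


@dataclass
class Entry:
    """One knowledge base entry (hypothetical shape)."""
    entry_id: str
    question: str
    answer: str
    source: str


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: a bag-of-words count vector."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, kb: list[Entry], k: int = 2) -> list[tuple[Entry, float]]:
    """Step 2: rank entries by similarity to the query and keep the top k."""
    q = embed(query)
    scored = [(e, cosine(q, embed(e.question + " " + e.answer))) for e in kb]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)[:k]


def build_prompt(query: str, hits: list[tuple[Entry, float]]) -> str:
    """Step 3: ground the model by placing numbered sources in the prompt."""
    context = "\n".join(
        f"[{i}] {e.answer} (source: {e.source})" for i, (e, _) in enumerate(hits, 1)
    )
    return (
        "Answer using only the numbered sources below and cite them by number.\n"
        f"{context}\n\nQuestion: {query}"
    )


def citations(hits: list[tuple[Entry, float]]) -> list[str]:
    """Step 4: the sources shown to the customer alongside the answer."""
    return [e.source for e, _ in hits]


# Placeholder entries; the answers and URLs are invented for the example.
kb = [
    Entry("kb-1", "Can I invest my IRA in real estate?",
          "Placeholder answer about real estate.", "https://example.com/real-estate"),
    Entry("kb-2", "How do I roll over my 401(k)?",
          "Placeholder answer about rollovers.", "https://example.com/rollovers"),
]

hits = retrieve("Can I buy real estate with my IRA?", kb)  # Step 1 is the query itself
prompt = build_prompt("Can I buy real estate with my IRA?", hits)
```

The prompt string would then be sent to the language model, and `citations(hits)` gives the links rendered under the reply.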

The chat handles the full range of IRA questions: contribution limits, prohibited transactions, rollover rules, investment options. When it can't answer confidently, it escalates to human support.
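One simple way to implement that handoff is a threshold on retrieval confidence. The sketch below assumes confidence is the similarity score of the best-matching knowledge base entry; the case study does not say how confidence is measured, and the `0.5` cutoff and the handoff message are made up for illustration.

```python
from dataclasses import dataclass

# Assumed cutoff; a real value would be tuned against logged conversations.
ESCALATION_THRESHOLD = 0.5


@dataclass
class ChatReply:
    text: str
    escalated: bool


def answer_or_escalate(top_score: float, draft_answer: str,
                       threshold: float = ESCALATION_THRESHOLD) -> ChatReply:
    """Return the drafted answer, or hand off when the best match is too weak."""
    if top_score < threshold:
        return ChatReply(
            "I'm not certain about this one, so I'm connecting you with our support team.",
            escalated=True,
        )
    return ChatReply(draft_answer, escalated=False)
```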

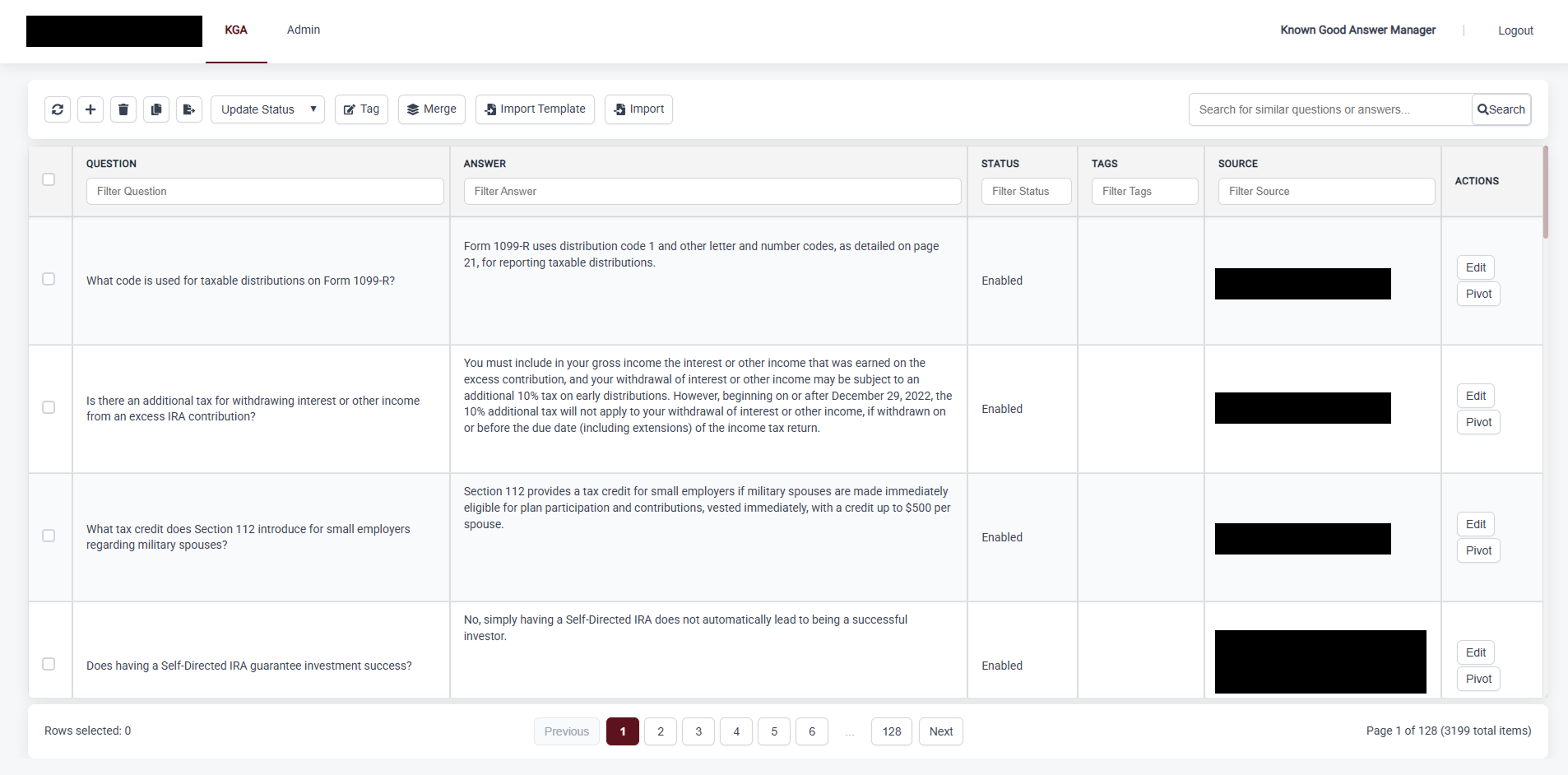

Knowledge Base Management

The real power is in the admin tooling that keeps the knowledge base current and comprehensive.

Content Ingestion:

Admins can add knowledge from multiple sources:

- URL crawling (pull content from existing web pages)

- PDF upload (product guides, compliance documents)

- Manual Q&A entry
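Whatever the source, ingested text has to be split into chunks that remember where they came from, so that answers can cite them later. A minimal sketch of that step follows, assuming word-based windows with overlap; the `Chunk` fields, window size, and overlap are illustrative choices rather than the values used in the project.

```python
from dataclasses import dataclass


@dataclass
class Chunk:
    text: str
    source: str       # URL, PDF filename, or a label for manual entries
    source_type: str  # "url", "pdf", or "manual"
    position: int     # order of the chunk within its source


def chunk_text(text: str, source: str, source_type: str,
               max_words: int = 50, overlap: int = 10) -> list[Chunk]:
    """Split text into overlapping word windows, each tagged with its source."""
    if overlap >= max_words:
        raise ValueError("overlap must be smaller than max_words")
    words = text.split()
    step = max_words - overlap
    chunks = []
    for position, start in enumerate(range(0, len(words), step)):
        window = words[start:start + max_words]
        chunks.append(Chunk(" ".join(window), source, source_type, position))
        if start + max_words >= len(words):
            break  # the last window already reached the end of the text
    return chunks
```

The overlap keeps a sentence that straddles a boundary retrievable from at least one chunk, at the cost of some duplicated text in the index.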

Semantic Search:

The admin interface includes semantic search across the entire knowledge base. Staff can find related content, identify duplicates, and ensure consistency.

Gap Identification:

This is the key feature. The system tracks customer questions that it could not answer well. These unanswered questions surface gaps in the knowledge base and show exactly what content needs to be created.
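A rough sketch of how such tracking could work: log every question whose best retrieval score falls below a cutoff, group near-identical phrasings, and rank the groups by frequency. The `GapTracker` class, the score cutoff, and the normalization rule are assumptions made for this example.

```python
import re
from collections import Counter


def normalize(question: str) -> str:
    """Collapse case and punctuation so repeated phrasings group together."""
    return " ".join(re.findall(r"[a-z0-9]+", question.lower()))


class GapTracker:
    """Counts questions that the knowledge base could not answer well."""

    def __init__(self, min_score: float = 0.5):
        self.min_score = min_score  # assumed cutoff for a "good" match
        self.counts = Counter()
        self.examples = {}          # first phrasing seen for each group

    def record(self, question: str, top_score: float) -> None:
        if top_score >= self.min_score:
            return  # answered well enough, so not a gap
        key = normalize(question)
        self.counts[key] += 1
        self.examples.setdefault(key, question)

    def top_gaps(self, n: int = 10) -> list[tuple[str, int]]:
        """Most frequent unanswered questions, shown in their original wording."""
        return [(self.examples[key], count) for key, count in self.counts.most_common(n)]


tracker = GapTracker()
tracker.record("What are the contribution limits for 2024?", 0.1)
tracker.record("what are the contribution limits for 2024", 0.2)
tracker.record("Can I invest my IRA in real estate?", 0.9)  # well covered, ignored
```

An admin view built on `top_gaps()` would then list the questions most in need of a new knowledge base entry.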

Observability Layer

I built analytics that help shape support strategy:

- Escalation rates: Which topics require human handoff?

- Customer feedback: Thumbs up/down on responses

- Top questions: What are customers asking most?

- Coverage gaps: Questions without confident answers
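Most of these metrics are simple aggregations over logged conversation turns. The sketch below assumes a hypothetical `Turn` record with a topic label, an escalation flag, and optional thumbs feedback; the field names and sample data are invented for illustration.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Optional


@dataclass
class Turn:
    topic: str
    question: str
    escalated: bool
    feedback: Optional[int] = None  # +1 thumbs up, -1 thumbs down, None if unrated


def escalation_rates(turns: list[Turn]) -> dict[str, float]:
    """Share of turns per topic that were handed off to a human."""
    totals, escalated = Counter(), Counter()
    for t in turns:
        totals[t.topic] += 1
        escalated[t.topic] += int(t.escalated)
    return {topic: escalated[topic] / totals[topic] for topic in totals}


def thumbs_up_share(turns: list[Turn]) -> Optional[float]:
    """Fraction of rated responses that received a thumbs up."""
    rated = [t.feedback for t in turns if t.feedback is not None]
    return sum(1 for f in rated if f > 0) / len(rated) if rated else None


def top_questions(turns: list[Turn], n: int = 3) -> list[tuple[str, int]]:
    """Most frequently asked questions, compared case-insensitively."""
    return Counter(t.question.strip().lower() for t in turns).most_common(n)


turns = [
    Turn("contributions", "What are the contribution limits for 2024?", True, -1),
    Turn("contributions", "What are the contribution limits for 2024?", False, 1),
    Turn("real estate", "Can I invest my IRA in real estate?", False, 1),
]
```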

Technical Architecture

RAG Pipeline:

- Azure AI Search for semantic retrieval with hybrid (keyword + vector) search

- Chunked documents with metadata for source attribution

- GPT-4 for response generation with retrieved context

Chat Widget:

- Embeddable React widget for easy integration

- Conversation state management for multi-turn dialogue

- Graceful degradation when AI is uncertain

Admin Platform:

- Full CRUD for knowledge base entries

- Bulk import/export capabilities

- Analytics dashboards for operational insights
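The hybrid (keyword + vector) search listed under the RAG pipeline needs a way to merge two separately ranked result lists. Azure AI Search documents Reciprocal Rank Fusion (RRF) for this purpose; the sketch below shows the general technique in plain Python, not the service's internals. The document IDs are hypothetical, and `k = 60` is the constant commonly used with RRF.

```python
def reciprocal_rank_fusion(rankings: list[list[str]], k: int = 60) -> list[tuple[str, float]]:
    """Merge ranked lists: each document earns 1 / (k + rank) per list it appears in."""
    scores = {}
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] = scores.get(doc_id, 0.0) + 1.0 / (k + rank)
    # Highest fused score first; ties broken by ID so the order is deterministic.
    return sorted(scores.items(), key=lambda item: (-item[1], item[0]))


keyword_hits = ["kb-2", "kb-1", "kb-3"]  # e.g. from full-text search
vector_hits = ["kb-1", "kb-4", "kb-2"]   # e.g. from embedding similarity
fused = reciprocal_rank_fusion([keyword_hits, vector_hits])
```

Documents that rank well in both lists (`kb-1` and `kb-2` here) rise to the top, which is why hybrid search tends to handle both exact terms such as form names and loosely worded questions.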

Results

Delivered in approximately 6 weeks, the system transformed customer support:

- 17,000 clients can now get instant answers

- 3,199 Q&A pairs in the managed knowledge base

- 400+ conversations handled through the chat

- Support team focuses on complex issues, not repetitive questions